The Rust CUDA Project: A Brief Overview

What is the project about?

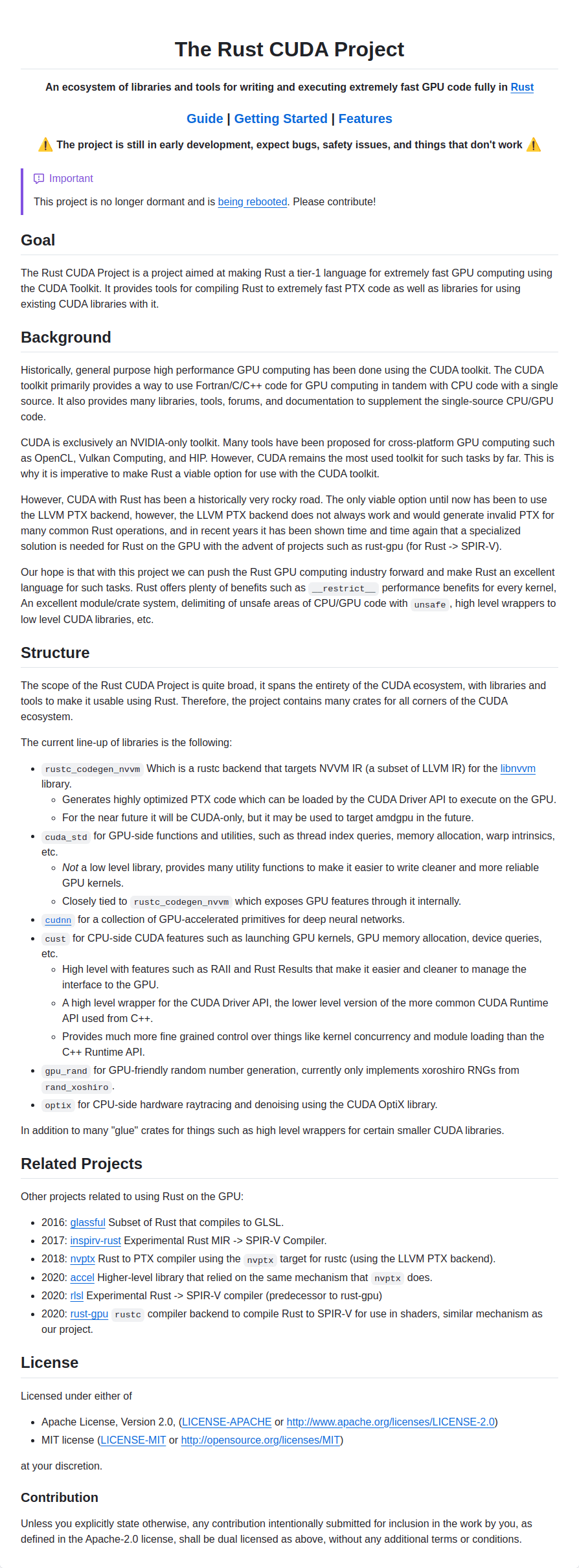

The Rust CUDA Project aims to make Rust a first-class language for high-performance GPU computing using NVIDIA's CUDA toolkit. It provides a complete ecosystem of tools and libraries to write, compile, and execute GPU-accelerated code entirely in Rust.

What problem does it solve?

- Historically difficult Rust/CUDA integration: Using Rust with CUDA has been challenging because it meant relying on LLVM's PTX backend, which is often unreliable and could generate incorrect code. This project instead provides a dedicated backend built on NVIDIA's NVVM.

- Lack of a comprehensive Rust-CUDA ecosystem: Existing solutions were fragmented. This project provides a unified set of libraries covering various aspects of CUDA development, from kernel writing to deep learning and ray tracing.

- Leveraging Rust's advantages: It brings Rust's safety features (such as explicit `unsafe` blocks), performance benefits (such as `__restrict__`-style no-aliasing guarantees on references), and modern tooling (the module and crate system) to GPU computing.
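The `__restrict__` point can be illustrated in plain CPU-side Rust. This is not code from the project's crates, and `scale_into` is a hypothetical name. In CUDA C++ a kernel author marks pointer parameters `__restrict__` to promise they do not overlap; in Rust, a `&mut` slice and a `&` slice passed to the same function are guaranteed by the borrow checker not to alias, so the compiler can rely on that without a manual annotation.

```rust
/// Writes `factor * input[i]` into `output[i]`.
///
/// `output` is a `&mut` slice and `input` a `&` slice, so the borrow checker
/// guarantees they never overlap. A C++ CUDA programmer would have to promise
/// the same thing by hand with `__restrict__`.
fn scale_into(output: &mut [f32], input: &[f32], factor: f32) {
    for (out, &x) in output.iter_mut().zip(input) {
        *out = factor * x;
    }
}

fn main() {
    let input = vec![1.0_f32, 2.0, 3.0];
    let mut output = vec![0.0_f32; 3];
    scale_into(&mut output, &input, 2.0);
    println!("{:?}", output); // [2.0, 4.0, 6.0]

    // An overlapping call such as `scale_into(&mut output, &output, 2.0)`
    // is rejected at compile time by the borrow checker.
}
```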

What are the features of the project?

- `rustc_codegen_nvvm`: A `rustc` backend that compiles Rust code to highly optimized PTX (Parallel Thread Execution) code via NVVM IR (a subset of LLVM IR). This is the core of the project, enabling efficient GPU code generation.

- `cuda_std`: A GPU-side standard library providing essential functions for kernel development (thread management, memory allocation, warp intrinsics, etc.). It's designed for cleaner and more reliable kernel code.

- `cust`: A high-level, CPU-side library for interacting with the GPU. It handles tasks like kernel launching, memory management, and device queries, using Rust's RAII and `Result` types for safer and cleaner code. It wraps the CUDA Driver API.

- `cudnn`: Bindings for the cuDNN library, providing GPU-accelerated primitives for deep neural networks.

- `gpu_rand`: GPU-based random number generation (currently xoroshiro RNGs).

- `optix`: Bindings for the CUDA OptiX library, enabling hardware-accelerated ray tracing and denoising.

- Glue crates: Additional libraries providing high-level wrappers for smaller CUDA libraries.

- Active development: After a dormant period, the project has been rebooted and is being actively developed.
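To make the kernel model concrete without a GPU, the sketch below emulates on the CPU how a 1D launch maps threads to array elements: each thread's global index is `block_idx * block_dim + thread_idx`, which is what a helper such as `cuda_std`'s `thread::index_1d()` computes on the device. Everything here (`ThreadCtx`, `add_kernel`, `launch_1d`) is a hypothetical stand-in written in plain Rust; a real kernel would be compiled by `rustc_codegen_nvvm` and launched through `cust`.

```rust
/// Hypothetical per-thread context: which block, and which thread within it.
#[derive(Clone, Copy)]
struct ThreadCtx {
    block_idx: u32,
    block_dim: u32,
    thread_idx: u32,
}

impl ThreadCtx {
    /// Global 1D index: block_idx * block_dim + thread_idx.
    fn index_1d(&self) -> u32 {
        self.block_idx * self.block_dim + self.thread_idx
    }
}

/// Kernel body: one (emulated) thread computes one element of `c = a + b`.
/// The bounds check matters because the grid is rounded up and may contain
/// more threads than elements.
fn add_kernel(ctx: ThreadCtx, a: &[f32], b: &[f32], c: &mut [f32]) {
    let idx = ctx.index_1d() as usize;
    if idx < a.len() {
        c[idx] = a[idx] + b[idx];
    }
}

/// Emulates a launch of `grid_dim` blocks of `block_dim` threads by running
/// every thread sequentially on the CPU (a GPU would run them in parallel).
fn launch_1d(grid_dim: u32, block_dim: u32, a: &[f32], b: &[f32], c: &mut [f32]) {
    for block_idx in 0..grid_dim {
        for thread_idx in 0..block_dim {
            let ctx = ThreadCtx { block_idx, block_dim, thread_idx };
            add_kernel(ctx, a, b, c);
        }
    }
}

fn main() {
    let n = 1000;
    let a: Vec<f32> = (0..n).map(|i| i as f32).collect();
    let b = vec![1.0_f32; n];
    let mut c = vec![0.0_f32; n];

    let block_dim = 256;
    // Round up so every element gets a thread: ceil(1000 / 256) = 4 blocks.
    let grid_dim = (n as u32 + block_dim - 1) / block_dim;
    launch_1d(grid_dim, block_dim, &a, &b, &mut c);

    println!("grid_dim = {grid_dim}, c[999] = {}", c[999]); // grid_dim = 4, c[999] = 1000
}
```

Because the emulation runs threads one at a time, a single `&mut` output slice is fine here. On a real GPU all threads run concurrently, which is one reason device kernels often write their output through raw pointers inside `unsafe` code.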

What are the technologies used in the project?

- Rust: The primary programming language.

- CUDA Toolkit: NVIDIA's platform for GPU computing.

- NVVM IR: NVIDIA's intermediate representation, a subset of LLVM IR, used for code generation.

- PTX: NVIDIA's low-level virtual machine and instruction set architecture for GPUs.

- CUDA Driver API: The lower-level API for interacting with CUDA (compared to the CUDA Runtime API).

- cuDNN: NVIDIA's library for deep neural networks.

- OptiX: NVIDIA's library for ray tracing.

- LLVM: Used as part of the compilation process (NVVM IR is a subset of LLVM IR).

What are the benefits of the project?

- Performance: Generates highly optimized PTX code through NVVM, NVIDIA's own optimizing GPU compiler.

- Safety: Leverages Rust's safety features (e.g., confining dangerous operations to explicit `unsafe` blocks) to improve code reliability and help prevent common GPU programming errors.

- Productivity: Provides high-level libraries and abstractions that simplify GPU development in Rust.

- Control: Uses the CUDA Driver API, offering finer-grained control over kernel concurrency and module loading than the CUDA Runtime API.

- Unified Ecosystem: Offers a comprehensive set of tools and libraries for various CUDA-related tasks.

- Modern Tooling: Benefits from Rust's excellent module/crate system.
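The safety and productivity points rest on two Rust idioms that `cust` applies to the Driver API: fallible calls return `Result`, and resources are released in `Drop` (RAII). The sketch below shows those idioms with a simulated device that only counts bytes; `FakeDevice`, `FakeDeviceBuffer`, and `FakeCudaError` are hypothetical and are not `cust`'s actual types or signatures.

```rust
use std::cell::Cell;
use std::fmt;
use std::rc::Rc;

/// Hypothetical error type standing in for a driver error code.
#[derive(Debug, PartialEq)]
enum FakeCudaError {
    OutOfMemory { requested: usize, available: usize },
}

impl fmt::Display for FakeCudaError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            FakeCudaError::OutOfMemory { requested, available } => write!(
                f,
                "out of memory: requested {requested} bytes, {available} available"
            ),
        }
    }
}

/// Hypothetical device that only tracks how many bytes are allocated.
struct FakeDevice {
    capacity: usize,
    used: Rc<Cell<usize>>,
}

/// Hypothetical device allocation. Its memory is released in `Drop`.
struct FakeDeviceBuffer {
    bytes: usize,
    used: Rc<Cell<usize>>,
}

impl FakeDevice {
    fn new(capacity: usize) -> Self {
        Self { capacity, used: Rc::new(Cell::new(0)) }
    }

    fn bytes_in_use(&self) -> usize {
        self.used.get()
    }

    /// Fallible calls return `Result`, so an error cannot be silently ignored.
    fn alloc(&self, bytes: usize) -> Result<FakeDeviceBuffer, FakeCudaError> {
        let available = self.capacity - self.used.get();
        if bytes > available {
            return Err(FakeCudaError::OutOfMemory { requested: bytes, available });
        }
        self.used.set(self.used.get() + bytes);
        Ok(FakeDeviceBuffer { bytes, used: Rc::clone(&self.used) })
    }
}

impl Drop for FakeDeviceBuffer {
    fn drop(&mut self) {
        // A real wrapper would call the driver's free function here.
        self.used.set(self.used.get() - self.bytes);
    }
}

/// Allocates two buffers; the second one does not fit.
fn run(device: &FakeDevice) -> Result<(), FakeCudaError> {
    let _a = device.alloc(600)?;
    let _b = device.alloc(600)?; // only 424 bytes left: `?` returns the error and `_a` is freed
    Ok(())
}

fn main() {
    let device = FakeDevice::new(1024);
    {
        let _buf = device.alloc(512).expect("512 bytes should fit");
        println!("in use: {}", device.bytes_in_use()); // in use: 512
    } // `_buf` is dropped here, returning its memory
    println!("in use: {}", device.bytes_in_use()); // in use: 0

    if let Err(e) = run(&device) {
        println!("error: {e}"); // error: out of memory: requested 600 bytes, 424 available
    }
    println!("in use: {}", device.bytes_in_use()); // in use: 0
}
```

The early return in `run` is the interesting case: even on the error path, the buffer that was already allocated is freed automatically, with no manual cleanup code.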

What are the use cases of the project?

- High-Performance Computing (HPC): Accelerating scientific simulations, data analysis, and other computationally intensive tasks.

- Deep Learning: Training and deploying deep neural networks using cuDNN.

- Ray Tracing and Graphics: Creating realistic visuals and simulations using OptiX.

- Game Development: Potentially for physics simulations, AI, or other GPU-intensive tasks (though the focus is CUDA on NVIDIA GPUs, not cross-vendor GPU programming like `rust-gpu`, which targets SPIR-V).

- Any application requiring massive parallel processing: Wherever the computational power of GPUs can be leveraged.
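Use cases such as Monte Carlo simulation need an independent random stream for every GPU thread, which is the job `gpu_rand` is listed for in the features. The CPU-only sketch below emulates that pattern to estimate π: each emulated thread seeds its own xoroshiro128+ generator (the published algorithm) via SplitMix64 and counts points that land inside the unit quarter circle. The seeding scheme and all names (`for_thread`, `pi_kernel`, `estimate_pi`) are hypothetical; this is not `gpu_rand`'s API.

```rust
/// SplitMix64 step: advances `state` and returns a well-mixed 64-bit value.
/// Used here only to turn (seed, thread index) into generator state.
fn splitmix64(state: &mut u64) -> u64 {
    *state = state.wrapping_add(0x9E37_79B9_7F4A_7C15);
    let mut z = *state;
    z = (z ^ (z >> 30)).wrapping_mul(0xBF58_476D_1CE4_E5B9);
    z = (z ^ (z >> 27)).wrapping_mul(0x94D0_49BB_1331_11EB);
    z ^ (z >> 31)
}

/// xoroshiro128+ generator (rotation/shift constants 24, 16, 37).
struct Xoroshiro128Plus {
    s: [u64; 2],
}

impl Xoroshiro128Plus {
    fn from_state(s: [u64; 2]) -> Self {
        Self { s }
    }

    /// Hypothetical per-thread seeding: each thread gets a SplitMix64-derived state.
    fn for_thread(seed: u64, thread_idx: u64) -> Self {
        let mut sm = seed.wrapping_add(thread_idx);
        Self::from_state([splitmix64(&mut sm), splitmix64(&mut sm)])
    }

    fn next_u64(&mut self) -> u64 {
        let s0 = self.s[0];
        let mut s1 = self.s[1];
        let result = s0.wrapping_add(s1);
        s1 ^= s0;
        self.s[0] = s0.rotate_left(24) ^ s1 ^ (s1 << 16);
        self.s[1] = s1.rotate_left(37);
        result
    }

    /// Uniform f64 in [0, 1) built from the top 53 bits.
    fn next_f64(&mut self) -> f64 {
        (self.next_u64() >> 11) as f64 * (1.0 / (1u64 << 53) as f64)
    }
}

/// Emulated kernel: one thread samples `samples` points in the unit square
/// and counts how many fall inside the quarter circle.
fn pi_kernel(seed: u64, thread_idx: u64, samples: u32) -> u64 {
    let mut rng = Xoroshiro128Plus::for_thread(seed, thread_idx);
    let mut hits = 0;
    for _ in 0..samples {
        let (x, y) = (rng.next_f64(), rng.next_f64());
        if x * x + y * y <= 1.0 {
            hits += 1;
        }
    }
    hits
}

/// Runs `threads` emulated threads sequentially and combines their counts.
fn estimate_pi(seed: u64, threads: u64, samples: u32) -> f64 {
    let hits: u64 = (0..threads).map(|t| pi_kernel(seed, t, samples)).sum();
    4.0 * hits as f64 / (threads * samples as u64) as f64
}

fn main() {
    println!("pi ~ {:.4}", estimate_pi(42, 1024, 1000));
}
```

Seeding from SplitMix64 keeps the sketch short; xoroshiro generators also provide jump functions, which are the usual way to guarantee non-overlapping per-thread streams.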

Important Note: The project is still in early development, so users should expect potential bugs and limitations.