YOLOv9 Project Description

What is the project about?

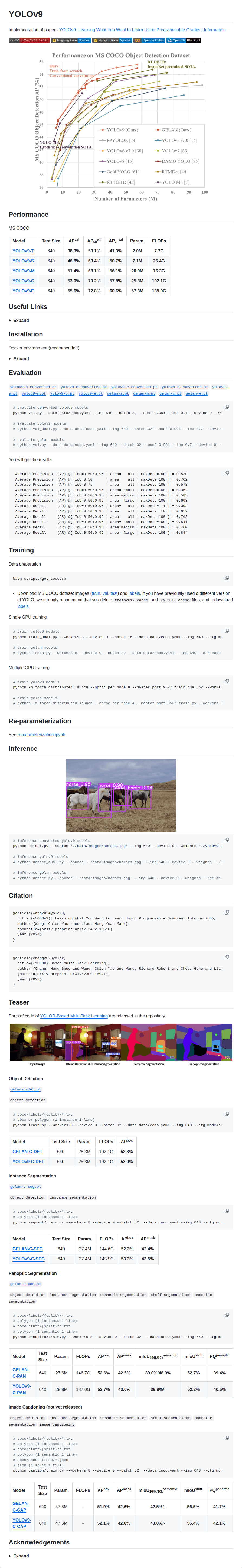

The project is an implementation of the YOLOv9 object detection model, based on the research paper "YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information." It represents an advancement in the YOLO (You Only Look Once) family of real-time object detectors.
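Like other YOLO detectors, the model predicts many candidate bounding boxes per image, and several of them usually cover the same object. A common post-processing step is non-maximum suppression (NMS). NMS keeps the highest-scoring box and discards any remaining box whose intersection over union (IoU) with it exceeds a threshold. The sketch below is a minimal pure-Python illustration of greedy NMS, not the project's own implementation. The `(x1, y1, x2, y2)` box format, the function names, and the 0.45 IoU threshold are assumptions chosen for illustration.

```python
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2) corner coordinates in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thresh: float = 0.45) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep
```

Take two boxes, `(10, 10, 50, 50)` scored 0.9 and `(12, 12, 52, 52)` scored 0.8. Their IoU is about 0.82, so only the first survives. A third box far away from both is kept as well.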

What problem does it solve?

YOLOv9 addresses information loss in deep neural networks, with a focus on object detection. As data passes through successive layers, a deep network can discard information that the task depends on. This makes the gradients used to update the weights less reliable. YOLOv9 introduces Programmable Gradient Information (PGI) to mitigate this. An auxiliary branch, used only during training, supplies complete input information to the loss, so the model receives reliable gradients even in very deep architectures. The aim is more accurate detection, including in harder scenes such as those with overlapping or occluded objects.

What are the features of the project?

- Object Detection: The core feature is real-time object detection, identifying and locating objects within images or video frames.

- Multiple Model Sizes: Offers different model sizes (T, S, M, C, E) to balance speed and accuracy for various applications.

- Programmable Gradient Information (PGI): The key innovation that improves information retention and model accuracy.

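- Generalized Efficient Layer Aggregation Network (GELAN): A lightweight architecture that combines ideas from CSPNet and ELAN and allows different computational blocks, balancing parameter count, computation, and accuracy.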

- MS COCO Dataset Performance: The paper reports state-of-the-art results among real-time object detectors on the challenging MS COCO benchmark.

- Integration and Deployment: Supports various deployment options, including ONNX, TensorRT, OpenVINO, TFLite, and ROS. The project's README also links to Hugging Face Spaces and Google Colab demos.

- Extensibility: The codebase is designed to be extended to other tasks, such as instance segmentation, panoptic segmentation, and even image captioning (some parts are still under development).

- Training and Evaluation Scripts: Provides scripts for training the model on custom datasets and evaluating its performance.

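- Re-parameterization: Includes a notebook for re-parameterization, which converts a trained model into a simpler, equivalent form for inference (for example, by removing training-only components). This improves inference speed without sacrificing accuracy.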
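The notebook's exact steps are not described here. As a generic illustration of the idea, the sketch below shows one widely used re-parameterization: folding an inference-mode BatchNorm layer into the convolution before it. The result is a single convolution with the same outputs and less work per inference. To stay dependency-free, it models a 1x1 convolution at a single pixel as a per-output-channel dot product. All function names are hypothetical, and the example is not taken from the project's code.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def conv1x1(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """1x1 convolution at one pixel: one dot product per output channel."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def batchnorm(z: Vector, gamma: Vector, beta: Vector,
              mean: Vector, var: Vector, eps: float = 1e-5) -> Vector:
    """Inference-mode BatchNorm using stored running statistics."""
    return [g * (zi - m) / math.sqrt(v + eps) + bt
            for zi, g, bt, m, v in zip(z, gamma, beta, mean, var)]


def fold_bn(weight: Matrix, bias: Vector, gamma: Vector, beta: Vector,
            mean: Vector, var: Vector, eps: float = 1e-5) -> Tuple[Matrix, Vector]:
    """Return (weight, bias) of one conv equivalent to conv followed by BN."""
    new_w: Matrix = []
    new_b: Vector = []
    for row, b, g, bt, m, v in zip(weight, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)          # per-channel BN scale
        new_w.append([w * scale for w in row])  # scale the kernel
        new_b.append((b - m) * scale + bt)      # absorb the BN shift into the bias
    return new_w, new_b
```

After folding, `conv1x1(x, *fold_bn(...))` matches `batchnorm(conv1x1(x, ...), ...)` up to floating-point rounding, so the BatchNorm layer can be dropped at inference time.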

What are the technologies used in the project?

- Python: The primary programming language.

- PyTorch: The deep learning framework used for model implementation and training.

- CUDA (NVIDIA GPUs): Leverages GPUs for accelerated training and inference.

- ONNX: For exporting models to a standardized format for interoperability.

- TensorRT: For optimized inference on NVIDIA GPUs.

- OpenVINO: For optimized inference on Intel hardware.

- TFLite: For deployment on mobile and embedded devices.

- ROS (Robot Operating System): For integration with robotics applications.

- Docker: Provides a containerized environment for easy setup and reproducibility.

- Other Libraries: Seaborn (visualization), thop (parameter and FLOPs counting), and OpenCV (image processing), among others.

What are the benefits of the project?

- Improved Accuracy: According to the paper, PGI, together with GELAN, yields higher object detection accuracy than previous YOLO versions.

- Real-time Performance: Maintains the real-time capabilities of the YOLO family, making it suitable for applications requiring fast processing.

- Flexibility: The different model sizes allow users to choose the best trade-off between speed and accuracy for their specific needs.

- Deployability: Support for various deployment frameworks makes it easier to integrate the model into different environments.

- Open Source: The code is publicly available, allowing for community contributions and further development.

What are the use cases of the project?

- Autonomous Driving: Detecting objects like cars, pedestrians, and traffic signs.

- Robotics: Enabling robots to perceive and interact with their environment.

- Video Surveillance: Real-time object detection for security applications.

- Medical Image Analysis: Identifying objects of interest in medical images.

- Industrial Automation: Quality control and object tracking in manufacturing processes.

- Image and Video Editing: Automatic object selection and manipulation.

- Augmented Reality: Overlaying virtual objects onto the real world.

- Counting and Speed Estimation: As mentioned in the "Useful Links" section of the project's README, the model can be adapted for counting objects and estimating their speed.

- Face Detection: The model can be used or adapted for face detection tasks.

- Any application requiring fast and accurate object detection.
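For the counting and speed-estimation use case, the detector only supplies boxes in each frame. Turning them into counts and speeds needs extra steps, usually a tracker that links boxes across frames, plus some camera calibration. The sketch below shows only the final arithmetic, under loudly stated assumptions. Each object's per-frame box centroids are already grouped into a track by a separate tracker. `meters_per_pixel` is a hypothetical, uniform calibration constant that ignores perspective. Counting uses a single horizontal line. It is not how the linked community projects necessarily implement these features.

```python
import math
from typing import Dict, List, Tuple

# Assumed input: box centroids (x, y) in pixels, one per frame, per tracked object.
Point = Tuple[float, float]


def speed_kmh(track: List[Point], fps: float, meters_per_pixel: float) -> float:
    """Average speed of one object over its centroid track, in km/h."""
    if len(track) < 2:
        return 0.0
    pixels = sum(math.dist(a, b) for a, b in zip(track, track[1:]))
    seconds = (len(track) - 1) / fps  # consecutive points are one frame apart
    meters_per_second = pixels * meters_per_pixel / seconds
    return meters_per_second * 3.6


def count_crossings(tracks: Dict[int, List[Point]], line_y: float) -> int:
    """Count tracked objects whose centroid crosses the line y = line_y."""
    count = 0
    for track in tracks.values():
        # A crossing happens when consecutive centroids lie on different sides.
        if any((a[1] < line_y) != (b[1] < line_y) for a, b in zip(track, track[1:])):
            count += 1
    return count
```

Each object is counted at most once, however many times it crosses the line. Real deployments typically replace the uniform scale with a perspective (homography) correction.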