llm.c Project Description

What is the project about?

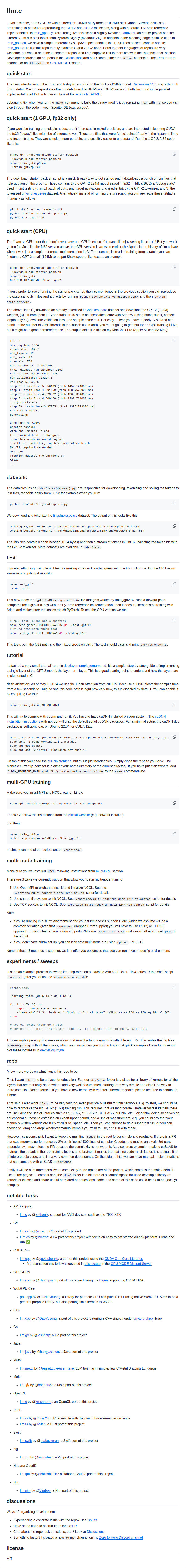

llm.c implements Large Language Model (LLM) training in pure C and CUDA, without depending on a large framework such as PyTorch or on the CPython interpreter. Its current focus is pretraining, specifically reproducing the GPT-2 and GPT-3 model series.
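
To make "pure C" concrete, here is a minimal sketch of one GPT-2 building block: the embedding step, which adds a learned token vector and a learned position vector for every position in the input. The function name `embed_forward`, its argument order, and the row-major layouts are illustrative assumptions, not llm.c's actual code or API.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical sketch of a GPT-2 embedding step in plain C.
 * Shapes (all row-major):
 *   tokens: (B, T)     token ids
 *   wte:    (V, C)     token embedding table
 *   wpe:    (maxT, C)  learned position embeddings, maxT >= T
 *   out:    (B, T, C)  output activations */
void embed_forward(float *out, const int *tokens, const float *wte,
                   const float *wpe, int B, int T, int C, int V) {
    for (int b = 0; b < B; b++) {
        for (int t = 0; t < T; t++) {
            int id = tokens[b * T + t];
            assert(id >= 0 && id < V);        /* id must index a row of wte */
            float *o = out + ((size_t)b * T + t) * C;
            const float *tok = wte + (size_t)id * C;
            const float *pos = wpe + (size_t)t * C;
            for (int c = 0; c < C; c++) {
                o[c] = tok[c] + pos[c];       /* token vector + position vector */
            }
        }
    }
}
```

Everything here is plain arrays and loops, with no tensor library underneath; a full model chains many such functions (layer normalization, attention, MLP) over the same flat buffers.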

What problem does it solve?

It offers a lightweight, and potentially faster, alternative to general-purpose deep learning frameworks for training LLMs. Because it has few dependencies, the codebase is small enough to read end to end, which makes it useful both for learning and as a starting point for performance work. It also serves as a testbed for writing and tuning custom CUDA kernels for individual LLM components such as attention, layer normalization, and matrix multiplication.

What are the features of the project?

- GPT-2 and GPT-3 Reproduction: Aims to reproduce the architecture and training of these models.

- Pure C/CUDA Implementation: Avoids large dependencies, making the code more portable and understandable.

- CPU and GPU Support: Includes both a simple CPU reference implementation and optimized CUDA code for GPUs.

- Mixed Precision Training: Supports mixed-precision training for improved performance.

- Multi-GPU and Multi-Node Training: Enables scaling training across multiple GPUs and nodes using MPI and NCCL.

- Flash Attention: Integrates Flash Attention from cuDNN for faster attention computation (optional).

- Unit Tests: Includes unit tests to ensure agreement between the C/CUDA code and a PyTorch reference implementation.

- Educational Resources: Provides tutorials and documentation to help users understand the implementation details.

- Dataset Handling: Includes scripts for downloading, tokenizing, and preparing datasets for training.
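
Two of the features above interact: mixed precision stores some values in a 16-bit format such as bfloat16, so unit tests have to compare low-precision results against a float32 reference using a tolerance rather than exact equality. The sketch below shows both ideas on the CPU. The names `f32_to_bf16`, `bf16_to_f32`, and `count_mismatches` and the tolerance values are hypothetical choices for illustration; llm.c's own mixed-precision path runs in CUDA, and its tests may use different tolerances.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* bfloat16 keeps the upper 16 bits of an IEEE-754 float32:
 * same 8-bit exponent, but only 7 explicit mantissa bits. */
typedef uint16_t bf16_t;

bf16_t f32_to_bf16(float f) {
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);           /* reinterpret without aliasing UB */
    /* Round to nearest, ties to even, on the 16 bits being dropped.
     * NaN inputs are not handled in this sketch. */
    uint32_t rounding = 0x7FFFu + ((bits >> 16) & 1u);
    return (bf16_t)((bits + rounding) >> 16);
}

float bf16_to_f32(bf16_t h) {
    uint32_t bits = (uint32_t)h << 16;        /* dropped mantissa bits become zero */
    float f;
    memcpy(&f, &bits, sizeof f);
    return f;
}

/* Counts elements where |a[i] - b[i]| > tol; 0 means the tensors agree. */
int count_mismatches(const float *a, const float *b, int n, float tol) {
    assert(tol >= 0.0f);
    int bad = 0;
    for (int i = 0; i < n; i++) {
        float d = a[i] - b[i];
        if (d < 0.0f) d = -d;
        if (d > tol) bad++;
    }
    return bad;
}
```

Round-tripping a float32 reference through bfloat16 and checking it with a small tolerance passes, while an exact comparison (tolerance 0) generally fails, which is why reference tests for reduced-precision code compare within a bound.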

What are the technologies used in the project?

- C

- CUDA

- cuDNN (optional, for Flash Attention)

- MPI (for launching and coordinating processes in multi-GPU/multi-node training)

- NCCL (for multi-GPU/multi-node communication)

- OpenMP (for CPU multi-threading)

- Python (for data processing and reference implementation)
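
OpenMP is the usual way to multi-thread CPU reference code: loops whose iterations are independent get a `#pragma omp parallel for`. The sketch below applies it to a GPT-2-style linear layer, where every output row can be computed on its own. The name `linear_forward` and the weight layout (one row of `weight` per output feature) are assumptions for illustration, not llm.c's matmul code.

```c
#include <assert.h>
#include <stddef.h>

/* out[i][o] = bias[o] + sum_c inp[i][c] * weight[o][c]
 *   inp: (BT, C)   weight: (OC, C)   bias: (OC) or NULL   out: (BT, OC)
 * Rows are split across OpenMP threads. Compile with -fopenmp to enable
 * threading; without it the pragma is ignored and the loop runs serially.
 * Each row is computed by a single thread in the same order either way,
 * so results are identical. */
void linear_forward(float *out, const float *inp, const float *weight,
                    const float *bias, int BT, int C, int OC) {
    assert(BT >= 0 && C > 0 && OC > 0);
    #pragma omp parallel for
    for (int i = 0; i < BT; i++) {
        const float *x = inp + (size_t)i * C;
        float *y = out + (size_t)i * OC;
        for (int o = 0; o < OC; o++) {
            const float *w = weight + (size_t)o * C;
            float acc = (bias != NULL) ? bias[o] : 0.0f;
            for (int c = 0; c < C; c++) {
                acc += x[c] * w[c];           /* dot product of input row and weight row */
            }
            y[o] = acc;
        }
    }
}
```

Parallelizing over output rows avoids any shared writes between threads, so no locks or reductions are needed.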

What are the benefits of the project?

- Lightweight and Minimal Dependencies: Easier to install and run, especially in environments with limited resources.

- Performance: Can be faster than general-purpose frameworks because its kernels are tuned for the specific models it targets.

- Educational Value: Provides a clean and understandable codebase for learning about LLM implementation.

- Customization: Allows for easy modification and experimentation with different kernels and optimizations.

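- Portability: The CPU reference implementation needs only a C compiler (with OpenMP optional), so it builds on more platforms than Python-based frameworks; the GPU path still requires CUDA.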

What are the use cases of the project?

- Research and Development: Exploring new LLM architectures, training techniques, and optimizations.

- Education: Learning about the inner workings of LLMs and GPU programming.

- Low-Resource Environments: Experimenting with small models, such as the 124M-parameter GPT-2, on machines without a GPU by using the CPU reference implementation.

- Custom LLM Implementations: Building specialized LLMs for specific tasks or domains.

- Benchmarking: Comparing the performance of different LLM implementations and hardware.
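
A fair benchmark runs a few untimed warm-up iterations and then averages over many timed repetitions. The sketch below does this for any CPU function using C11's `timespec_get`; the names `time_ms`, `sum_job`, and `sum_job_run` are hypothetical. On POSIX systems `clock_gettime(CLOCK_MONOTONIC, ...)` is a steadier clock, and when timing GPU kernels the host must wait for the device (for example with `cudaDeviceSynchronize()`) before reading the clock, because kernel launches are asynchronous.

```c
#include <assert.h>
#include <time.h>

/* Mean wall-clock time in milliseconds of `repeats` calls to fn(arg),
 * measured after `warmup` untimed calls that let caches and one-time
 * setup settle. Requires C11 for timespec_get. */
double time_ms(void (*fn)(void *), void *arg, int warmup, int repeats) {
    assert(repeats > 0);
    for (int i = 0; i < warmup; i++) fn(arg);
    struct timespec start, end;
    timespec_get(&start, TIME_UTC);
    for (int i = 0; i < repeats; i++) fn(arg);
    timespec_get(&end, TIME_UTC);
    double elapsed = (double)(end.tv_sec - start.tv_sec) * 1e3
                   + (double)(end.tv_nsec - start.tv_nsec) / 1e6;
    return elapsed / repeats;
}

/* Example workload: sums an array and stores the result, so the
 * compiler cannot discard the work as unused. */
typedef struct { const float *x; int n; float result; } sum_job;

void sum_job_run(void *arg) {
    sum_job *job = (sum_job *)arg;
    float s = 0.0f;
    for (int i = 0; i < job->n; i++) s += job->x[i];
    job->result = s;
}
```

The same harness can time two implementations of one operation on identical inputs, which is the basic comparison behind any performance claim.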