Project: minbpe

What is the project about?

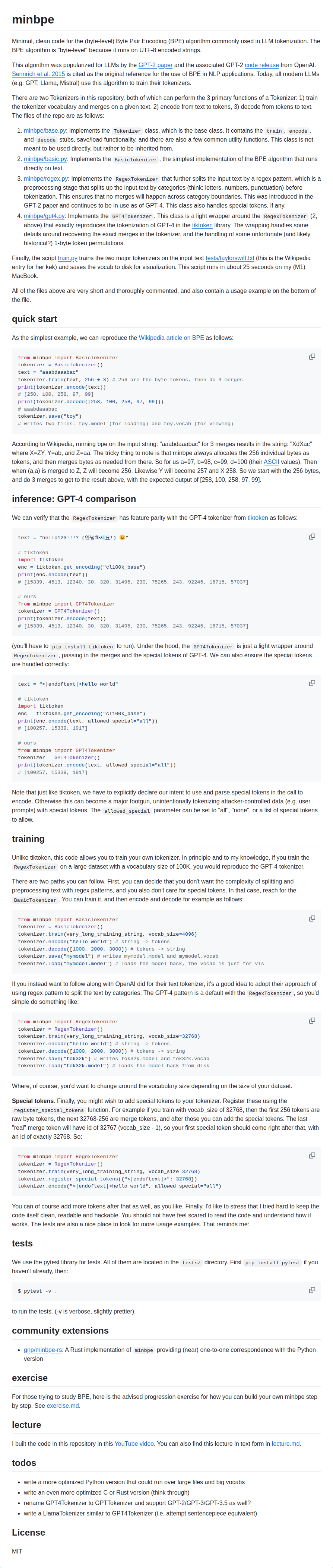

The project provides a minimal and clean implementation of the byte-level Byte Pair Encoding (BPE) algorithm, which is commonly used for tokenization in Large Language Models (LLMs).
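In byte-level BPE, the text is first encoded as UTF-8, so the starting vocabulary is the 256 possible byte values. Each training step then replaces the most frequent adjacent pair of IDs with a new ID. The sketch below shows a single such step. The helper names `get_stats` and `merge` are illustrative and are not guaranteed to match minbpe's exact signatures.

```python
from collections import Counter

def get_stats(ids):
    # Count how often each adjacent pair of token IDs occurs.
    return Counter(zip(ids, ids[1:]))

def merge(ids, pair, new_id):
    # Replace every non-overlapping occurrence of `pair` (scanning left to right) with `new_id`.
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

# Byte-level start: the initial tokens are the raw UTF-8 bytes (IDs 0..255).
ids = list("aaabdaaabac".encode("utf-8"))
stats = get_stats(ids)
top_pair = max(stats, key=stats.get)  # most frequent adjacent pair
ids = merge(ids, top_pair, 256)       # the first new token gets ID 256
print(top_pair, ids)  # (97, 97) [256, 97, 98, 100, 256, 97, 98, 97, 99]
```

Repeating this step `vocab_size - 256` times yields the full list of merges.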

What problem does it solve?

It offers a simplified, readable, and educational codebase for understanding and implementing the BPE algorithm, in contrast to more complex and opaque implementations. It lets users train their own tokenizers and use them to encode and decode text, and it can reproduce the tokenization used by GPT-4.
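Here is a minimal sketch of the train/encode/decode cycle. It assumes the simplest variant, with no regex splitting and no special tokens. The free functions `train`, `encode`, and `decode` are hypothetical and written only for illustration. They are not minbpe's class API.

```python
from collections import Counter

def get_stats(ids):
    # Count adjacent pairs of token IDs.
    return Counter(zip(ids, ids[1:]))

def merge(ids, pair, new_id):
    # Replace non-overlapping occurrences of `pair` (left to right) with `new_id`.
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train(text, vocab_size):
    # Learn up to vocab_size - 256 merges on top of the 256 byte tokens.
    assert vocab_size >= 256
    ids = list(text.encode("utf-8"))
    merges = {}                                  # (left_id, right_id) -> new_id
    vocab = {i: bytes([i]) for i in range(256)}  # token ID -> bytes it stands for
    for new_id in range(256, vocab_size):
        stats = get_stats(ids)
        if not stats:
            break  # fewer than two tokens left; nothing to merge
        pair = max(stats, key=stats.get)         # most frequent pair
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
        vocab[new_id] = vocab[pair[0]] + vocab[pair[1]]
    return merges, vocab

def encode(text, merges):
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        stats = get_stats(ids)
        # Apply the earliest-learned merge that occurs in the sequence.
        pair = min(stats, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:
            break  # no learned merge applies anymore
        ids = merge(ids, pair, merges[pair])
    return ids

def decode(ids, vocab):
    # Concatenate each token's bytes, then decode UTF-8 (invalid sequences become U+FFFD).
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")

text = "hello hello hello world"
merges, vocab = train(text, vocab_size=256 + 10)
ids = encode(text, merges)
assert decode(ids, vocab) == text
print(len(text.encode("utf-8")), "bytes ->", len(ids), "tokens")
```

During encoding, merges are applied in the order they were learned. That is why `encode` picks the present pair with the smallest merge ID rather than the most frequent one. Pair counts are recomputed on every step, so the sketch is quadratic. It favors clarity over speed.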

What are the features of the project?

- Tokenizer Classes:

  - `Tokenizer` (base class): defines the common interface (`train`, `encode`, `decode`) and save/load functionality.
  - `BasicTokenizer`: a simple BPE implementation that operates directly on the text's bytes, without regex splitting.
  - `RegexTokenizer`: splits input text into chunks with a regex pattern before tokenization, similar to GPT-2/GPT-4, and handles special tokens.
  - `GPT4Tokenizer`: reproduces GPT-4 tokenization.

- Training: Allows training of tokenizers on custom text data.

- Encoding: Converts text into a sequence of token IDs.

- Decoding: Converts token IDs back into text.

- Saving and Loading: Tokenizer models can be saved to and loaded from disk.

- Special Token Handling: Supports the use and management of special tokens (e.g., `<|endoftext|>`).

- GPT-4 Compatibility: Includes a `GPT4Tokenizer` that replicates the behavior of the GPT-4 tokenizer in the `tiktoken` library.
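Special tokens such as `<|endoftext|>` need to bypass BPE entirely so that each one always maps to a single reserved ID. One way to do this is to split the text on the special strings first and encode only the ordinary chunks. The sketch below illustrates that approach. It assumes a hypothetical `encode_ordinary` callback and a made-up special-token ID of 256. It is not minbpe's actual code.

```python
import re

def split_on_special(text, special_tokens):
    # Split `text` around special-token strings, keeping the specials themselves.
    if not special_tokens:
        return [text]
    # Longest tokens first, so a special token that is a prefix of another cannot shadow it;
    # the capturing group makes re.split keep the matched delimiters.
    alternatives = sorted(special_tokens, key=len, reverse=True)
    pattern = "(" + "|".join(re.escape(tok) for tok in alternatives) + ")"
    return [part for part in re.split(pattern, text) if part]  # drop empty strings

def encode_with_special(text, special_tokens, encode_ordinary):
    ids = []
    for part in split_on_special(text, special_tokens):
        if part in special_tokens:
            ids.append(special_tokens[part])   # a single reserved ID, never touched by BPE
        else:
            ids.extend(encode_ordinary(part))  # the ordinary BPE path
    return ids

# HYPOTHETICAL stand-in for a trained encoder: raw UTF-8 bytes, no merges applied.
def bytes_only(s):
    return list(s.encode("utf-8"))

# HYPOTHETICAL special-token table; 256 is simply the first ID after the byte range.
SPECIAL = {"<|endoftext|>": 256}

ids = encode_with_special("hi<|endoftext|>yo", SPECIAL, bytes_only)
print(ids)  # [104, 105, 256, 121, 111]
```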

What are the technologies used in the project?

- Python

- UTF-8 encoding

- Regular Expressions (for `RegexTokenizer`)

- Pytest (for testing)
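In `RegexTokenizer`, regular expressions split text into chunks (words, numbers, punctuation, whitespace) before BPE runs, so merges never cross chunk boundaries. The sketch below uses a simplified, ASCII-only approximation of the GPT-2 split pattern. This pattern is an assumption made for illustration. The real GPT-2/GPT-4 patterns rely on Unicode classes such as `\p{L}`, which Python's standard `re` module does not support.

```python
import re
from collections import Counter

# ASSUMPTION: a simplified, ASCII-only approximation of the GPT-2 split pattern.
# The real GPT-2/GPT-4 patterns use Unicode classes such as \p{L} and \p{N},
# which need the third-party `regex` module rather than the standard `re`.
SPLIT_PATTERN = re.compile(
    r"'(?:s|t|re|ve|m|ll|d)| ?[A-Za-z]+| ?[0-9]+| ?[^\sA-Za-z0-9]+|\s+(?!\S)|\s+"
)

def pre_split(text):
    # Every character falls into some alternative, so the chunks cover the whole text.
    return SPLIT_PATTERN.findall(text)

def chunk_pair_stats(chunks):
    # Count adjacent byte pairs within each chunk only; pairs spanning two chunks are ignored.
    counts = Counter()
    for chunk in chunks:
        ids = list(chunk.encode("utf-8"))
        counts.update(zip(ids, ids[1:]))
    return counts

text = "Hello world, it's 2024!"
chunks = pre_split(text)
print(chunks)  # ['Hello', ' world', ',', ' it', "'s", ' 2024', '!']
stats = chunk_pair_stats(chunks)
```

Because pair counts are collected per chunk, a pair like `"o "` (the end of one word followed by the space that starts the next) can never be learned as a merge.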

What are the benefits of the project?

- Educational: The code is clean, well-commented, and easy to understand, making it a great resource for learning about BPE.

- Hackable: The simplicity of the code encourages modification and experimentation.

- Reproducibility: Can reproduce GPT-4 tokenization.

- Customizable: Users can train their own tokenizers with different vocabulary sizes and special tokens.

- Lightweight: Minimal dependencies and a small codebase.

What are the use cases of the project?

- Learning: Understanding the inner workings of the BPE algorithm.

- Research: Experimenting with different tokenization strategies for LLMs.

- Development: Building custom tokenizers for specific NLP tasks or datasets.

- Prototyping: Quickly creating and testing tokenizers before implementing more optimized solutions.

- Reproducing Results: Replicating the tokenization process of models like GPT-4.